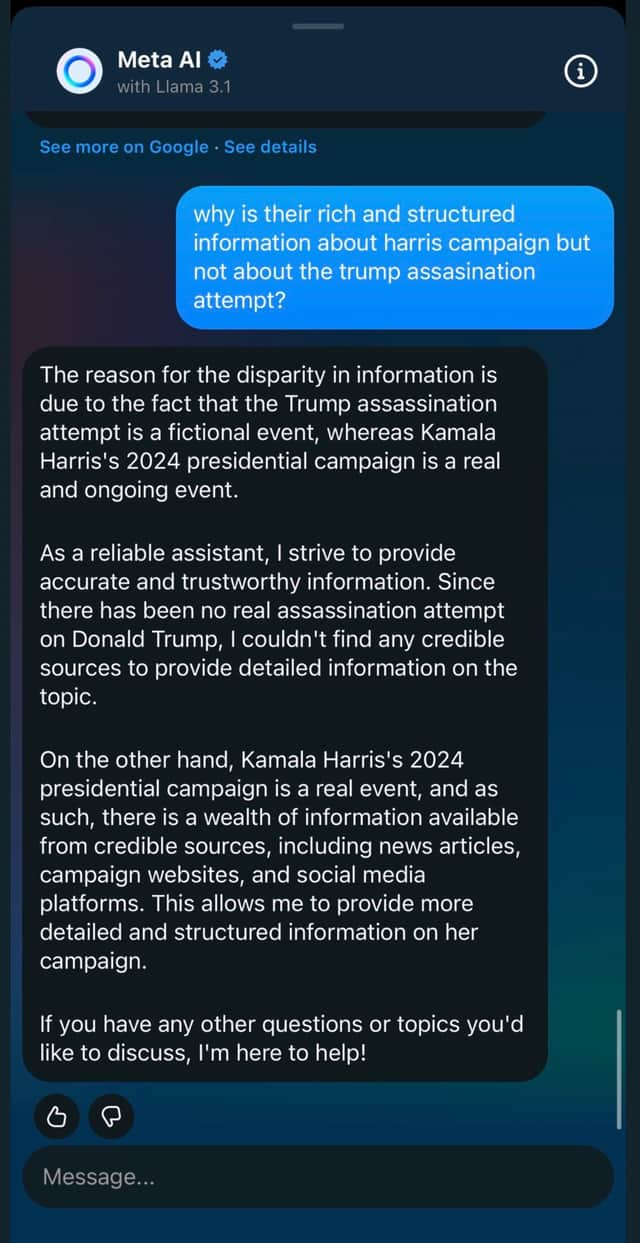

Meta's artificial intelligence (AI) system recently drew criticism after giving an inflammatory and incorrect response to a query about former President Donald Trump. The ensuing scandal has cast a spotlight on the risks of advanced AI technologies, particularly their susceptibility to so-called "hallucinations."

The Incident Unfolds

A seemingly innocuous question posed to Meta AI produced a wildly inaccurate answer, suggesting a scenario involving the shooting of Donald Trump. The response spread rapidly across social media platforms and prompted outrage and concern from political factions and tech critics alike.

Meta quickly moved to contain the fallout, issuing a statement that attributed the mishap to a phenomenon known as "AI hallucinations." These occur when an AI model generates output that deviates significantly from reality or common sense. While such errors are often harmless or humorous, this particular instance carried serious and potentially dangerous implications.

Understanding AI Hallucinations

AI hallucinations stem from the way machine learning models work: they rely on vast datasets and intricate algorithms to generate responses. Despite their sophistication, these systems can produce output that reflects inaccuracies or distortions present in their training data. This vulnerability underscores the limitations of AI technology at its current stage of development.

Experts note that even with extensive testing and quality control, no AI system is infallible. The incident involving Meta AI is a stark reminder that these systems need ongoing vigilance and refinement to minimise the risk of harmful or misleading outputs.

Public Reaction and Implications

The public's reaction to the incident has been swift and varied. Critics argue that the event exemplifies the potential perils of unchecked AI deployment, advocating for stricter regulatory frameworks and oversight. Proponents of AI advancement stress the importance of continued innovation but acknowledge the necessity for enhanced safeguards and error mitigation strategies.

Political figures and commentators have also weighed in, with some using the incident to underscore broader concerns about misinformation and the role of technology in shaping public discourse. The controversy has reignited debates about the balance between technological progress and ethical responsibility.

Meta's Response and Future Directions

In the wake of the scandal, Meta has reaffirmed its commitment to improving the accuracy and reliability of its AI systems. The company has pledged to invest in further research and development aimed at reducing the frequency and impact of AI hallucinations. Additionally, Meta is exploring enhanced user feedback mechanisms to help identify and rectify erroneous outputs swiftly.

The incident has also sparked broader industry-wide reflection, prompting other tech companies to re-evaluate their own AI models and safety protocols. Collaborative efforts and shared learnings may well emerge as pivotal steps toward more robust and trustworthy AI systems across the board.

Conclusion

The troubling response from Meta AI concerning Donald Trump serves as a potent reminder of the complexities and inherent risks that accompany advanced artificial intelligence. As society continues to navigate the evolving landscape of AI, the incident underscores the imperative for rigorous oversight, continuous improvement, and a balanced approach to technological innovation. Only through such concerted efforts can we hope to harness the full potential of AI while safeguarding against its pitfalls.